Background on the WordPress dashboard error message “RSS Error: WP HTTP Error: name look-up timed out”: out of nowhere I got this error message on the dashboard of my WordPress blog, and my pages were displaying very slowly, especially the widgets in the right-hand column, even after updating my WooCommerce Recurring Payments.

It turns out that my server (a Fedora Linux system running in my basement; I am the system admin) required a change to its DNS settings. I knew this change was coming, but I wasn’t sure when, and this error was the first symptom telling me I should have planned ahead a little better.

I was changing ISPs and my home network DNS settings needed to change from 192.168.100.155 to 192.168.100.164.

- I edited my /etc/resolv.conf file,
- I edited the files under /etc/sysconfig, and then
- I restarted my web server.

And everything was fine. But it wasn’t as easy as it sounds. Here are some notes for my reference and the potential benefit of others:

/etc/resolv.conf

For a quick test, go to a command line and try to ping yahoo.com. This didn’t work for me. My old ISP’s DNS server had gone offline. This was expected, and I needed to update my settings.

The file /etc/resolv.conf is the key file that gets looked at for “every” DNS lookup. Anything on your Linux system that uses gethostbyname() checks this file. In theory, all I had to do was edit this file and that should fix my “RSS Error: WP HTTP Error: name look-up timed out” error.

/etc/resolv.conf

    # Generated by NetworkManager
    # was 192.168.100.155
    nameserver 192.168.100.164

The quick test is to ping yahoo.com. Sure enough it works fine.
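To double-check which servers the resolver is pointed at without eyeballing the file, something like the following could be used. It is only a minimal sketch: the function name list_nameservers is made up for this note, and it assumes the usual resolv.conf layout of one "nameserver ADDRESS" entry per line.

```shell
#!/bin/sh
# list_nameservers is a hypothetical helper name, not a standard tool.
# It prints the address from every "nameserver" line of a
# resolv.conf-style file (default: /etc/resolv.conf).
# Comment lines are skipped because their first field is not "nameserver".
list_nameservers() {
    awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}
```

Called with no argument it reads /etc/resolv.conf. If the old address still shows up here, the edit did not take. Running getent hosts yahoo.com afterwards is another way to exercise the system resolver besides ping.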

/etc/sysconfig

We know from experience that the /etc/resolv.conf file is generated by the Fedora NetworkManager configuration service. So go into /etc/sysconfig, find every occurrence of the old IP address, and switch it to the new one. For example, I ran the following bash command line to find the files I needed to edit:

    [root@kozik2 sysconfig]# grep -r 192.168.100.155 * | grep DNS
    networking/profiles/default/ifcfg-eth0:DNS1=192.168.100.155
    networking/devices/ifcfg-eth0:DNS1=192.168.100.155
    network-scripts/ifcfg-eth0:DNS1=192.168.100.155

I went into each of these files and changed the 155 to 164. This way, the next time NetworkManager runs, my /etc/resolv.conf won’t revert to its old settings.
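I made these edits by hand, but with more than a few files the same change could be scripted. The following is a rough sketch under some assumptions: swap_dns is a made-up helper name, the DNS entries are unquoted lines of the form DNS1=ADDRESS as in the grep output above, and the installed sed accepts -i with a backup suffix.

```shell
#!/bin/sh
# swap_dns is a hypothetical helper name.
# It rewrites lines of the form DNS<n>=OLD to DNS<n>=NEW in each file
# and leaves the original next to it with a .bak suffix.
# Usage: swap_dns OLD NEW FILE...
swap_dns() {
    old=$1 new=$2
    shift 2
    # Escape the dots so "." matches a literal dot, not any character.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    for f in "$@"; do
        # Only whole-value matches on DNS lines are touched.
        sed -i.bak "s/^\(DNS[0-9]*=\)${old_re}\$/\1${new}/" "$f"
    done
}
```

For my case the call would look like swap_dns 192.168.100.155 192.168.100.164 network-scripts/ifcfg-eth0, followed by the same grep as before to confirm nothing still points at the old address.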

Not enough, I needed to troubleshoot

So I thought I knew what I was doing. I had fixed the DNS IP address, but I was still getting the “RSS Error: WP HTTP Error: name look-up timed out” error on my blog’s dashboard, and the posting pages were still very slow to display. Something still wasn’t right with WordPress.

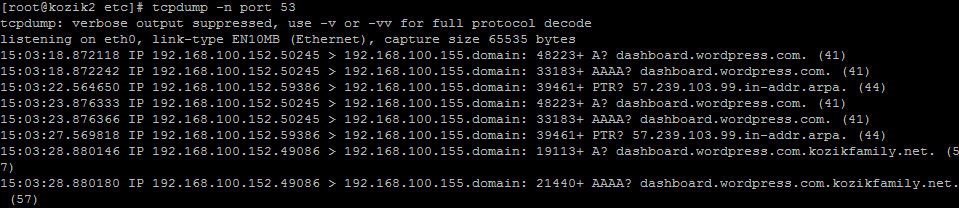

Since ping now worked, I decided to run tcpdump and see what’s going on:

tcpdump -n port 53 | grep 192.168.100.155

It just sat there. Good, I thought. Next, I tried to retrieve my dashboard page, and tcpdump started printing unsuccessful requests to the DNS server at .155 to resolve dashboard.wordpress.com. Ah, I forgot one last step!!

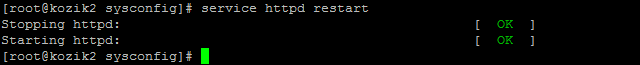

Don’t forget to restart the webserver!!

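I forgot that on initialization, PHP reads from resolv.conf once and then continues to use that same IP address! Because PHP was still running with the settings it had picked up when the web server started, restarting the web server with the following command fixed everything: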

service httpd restart

I then refreshed my dashboard page and everything came up fast and normal.
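Next time, a quick way to spot this situation might be to compare when resolv.conf was last changed with when the web server was started. The sketch below is only an illustration: resolv_changed_since is a made-up helper name, the start time has to be supplied by the caller as seconds since the epoch, and stat -c %Y is the GNU coreutils spelling, so other platforms may need a different option.

```shell
#!/bin/sh
# resolv_changed_since is a hypothetical helper name.
# Exit status 0: FILE was modified after START_EPOCH, so a process
#                started at START_EPOCH may still hold old DNS settings.
# Exit status 1: FILE has not changed since START_EPOCH.
# Exit status 2: FILE could not be examined.
# Usage: resolv_changed_since START_EPOCH [FILE]
resolv_changed_since() {
    start=$1
    file=${2:-/etc/resolv.conf}
    # Modification time in seconds since the epoch (GNU stat syntax).
    mtime=$(stat -c %Y "$file") || return 2
    [ "$mtime" -gt "$start" ]
}
```

On a system with a procps-style ps, the start time of a process could probably be derived as the current time minus the output of ps -o etimes= -p PID, but I have not needed that here. If the function succeeds for the httpd start time, a restart is likely due.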